Should we build AI data centers on the moon?

Spoiler alert: it could cut the energy bill by about 40%, according to some napkin math I did this weekend, prompted by Zuck’s comments on Thursday about the astronomical number of H100s Meta is investing in.

Here’s my thought process:

Bigger AI is better, and we’re in a race for Artificial General Intelligence (AGI). Bigger models need more compute, which means bigger data centers. Energy makes up most of the running costs of a data center, and about 40% of that energy goes to cooling. In space, the cooling is essentially done for you, because space is already cold: just a few degrees above absolute zero.

But the moon is far. It takes light 1.3 seconds to get there. Round trip is 2.6 seconds. Way too slow. We won’t wait that long to talk to our AI. But what about Earth orbit? In geosynchronous orbit, you could keep an orbital data center in the dark (it gets hot in the sun, even in space). That’s about 36,000 kilometers away, and light would take just 0.24 seconds for a round trip. That could work.
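For anyone who wants to check the latency numbers, here is a minimal sketch of the light-travel arithmetic. The two distances are my assumptions for illustration (mean Earth-Moon distance and geostationary altitude); real links would add routing and processing delays on top.

```python
# Speed of light in vacuum, in km/s.
C_KM_PER_S = 299_792.458

# Approximate one-way distances in km (assumed values for this sketch):
MOON_KM = 384_400  # mean Earth-Moon distance
GEO_KM = 35_786    # geostationary altitude above the equator


def round_trip_seconds(distance_km: float) -> float:
    """Time for light to go there and back, ignoring all other delays."""
    return 2 * distance_km / C_KM_PER_S


moon_rtt = round_trip_seconds(MOON_KM)
geo_rtt = round_trip_seconds(GEO_KM)

print(f"Moon round trip:           {moon_rtt:.2f} s")
print(f"Geosynchronous round trip: {geo_rtt:.2f} s")
```

The results land at roughly 2.6 seconds for the moon and 0.24 seconds for geosynchronous orbit, in line with the figures above.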

Ok, so latency checks out. Now let’s see how much money this could save:

Mark Zuckerberg said last Thursday that Meta was investing in “600k H100 equivalents of compute” for AI. The H100 is NVIDIA’s mainline GPU chip – the backbone for AI computing today. One H100 costs about $30,000. Estimates say Zuck’s investment will cost $10B (and I’m guessing that’s probably just for the 350k they will get this year).

One DGX H100 uses about 10 kW of power. Running 24/7, that’s 87,600 kWh per year. In New York City, 1 kWh of power costs 24 cents, which works out to about $21,000 per unit per year. Counting one such unit for each of the 600k H100 equivalents, Zuck’s energy bill for this would be about $12.6B per year. Since 40% of that is cooling, the cooling alone will run about $5B a year.
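The energy math can be reproduced in a few lines. This is only a sketch using the figures quoted in this post, including its simplification of counting one 10 kW unit per H100 equivalent; the variable names are mine.

```python
# Inputs taken from the post (napkin-math assumptions, not measurements).
UNITS = 600_000            # "H100 equivalents", one 10 kW unit each
POWER_KW = 10              # power draw per unit
HOURS_PER_YEAR = 24 * 365  # running 24/7
PRICE_PER_KWH = 0.24       # USD, New York City rate quoted in the post
COOLING_SHARE = 0.40       # fraction of energy spent on cooling

kwh_per_unit = POWER_KW * HOURS_PER_YEAR           # energy per unit per year
cost_per_unit = kwh_per_unit * PRICE_PER_KWH       # USD per unit per year
energy_bill = cost_per_unit * UNITS                # USD per year, whole fleet
cooling_bill = energy_bill * COOLING_SHARE         # USD per year, cooling only

print(f"kWh per unit per year: {kwh_per_unit:,}")
print(f"Energy bill:  ${energy_bill / 1e9:.1f}B per year")
print(f"Cooling bill: ${cooling_bill / 1e9:.1f}B per year")
```

Changing `PRICE_PER_KWH` or `COOLING_SHARE` shows how sensitive the savings are to those two guesses.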

But this assumes that GPUs like the H100 remain as energy hungry as they are today. At Arena, we’re applying our AI at some of the world’s top chip manufacturers to make GPUs pack more punch per watt.

For our napkin math though, let’s ignore these upcoming efficiency gains and say the $5B cooling bill translates straight to $5B of savings per year if Zuck were to use this hypothetical orbital AI datacenter.

Then we need to factor in launch costs:

How much would it cost to launch 600,000 H100s into space? Falcon Heavy payload launch costs to geosynchronous orbit are about $6,000 per kilo (using the SpaceX calculator here). One NVIDIA DGX H100 weighs 287 pounds, or 130 kilos, so that’s $780,000 just to launch one. But launch costs are coming down quickly. NASA has a target to get launch costs down to tens of dollars per kilo by 2040. Let’s be conservative and say we don’t get quite there, but that we can get launch costs to $200 per kilo (as SpaceX speculates here). At this price, launching one unit costs $26,000, and launching our 600k H100s would cost $15.6B.
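Here is the same launch arithmetic as a small sketch, again using only the numbers from this post and counting one 130 kg unit per H100 equivalent. The function name is hypothetical.

```python
# Inputs taken from the post (napkin-math assumptions).
UNITS = 600_000
UNIT_MASS_KG = 130           # one DGX H100, about 287 lb
COST_TODAY_PER_KG = 6_000    # USD, Falcon Heavy estimate quoted above
COST_FUTURE_PER_KG = 200     # USD, speculative future price


def launch_cost(units: int, mass_kg: int, usd_per_kg: int) -> int:
    """Total launch cost in USD for `units` payloads of `mass_kg` each."""
    return units * mass_kg * usd_per_kg


one_unit_today = launch_cost(1, UNIT_MASS_KG, COST_TODAY_PER_KG)
fleet_today = launch_cost(UNITS, UNIT_MASS_KG, COST_TODAY_PER_KG)
fleet_future = launch_cost(UNITS, UNIT_MASS_KG, COST_FUTURE_PER_KG)

print(f"One unit at today's price:  ${one_unit_today:,}")
print(f"Fleet at today's price:     ${fleet_today / 1e9:.1f}B")
print(f"Fleet at $200 per kilo:     ${fleet_future / 1e9:.1f}B")
```

At today’s price the full fleet would cost hundreds of billions of dollars to launch, which is why the whole case rests on the cheaper future price.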

So, in very broad strokes, you’d need to spend $15.6B once to save $5B per year. That’s a pretty solid business case.
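To put a number on that, here is a rough payback sketch. It assumes the $5B yearly saving stays flat and ignores everything else (hardware refresh, ground stations, financing), so treat it as an illustration of the napkin math rather than a financial model.

```python
import math

LAUNCH_COST = 15.6e9     # USD, one-time, from the estimate above
YEARLY_SAVINGS = 5.0e9   # USD per year, cooling bill avoided (assumed flat)

# Years of savings needed to cover the one-time launch cost.
payback_years = LAUNCH_COST / YEARLY_SAVINGS


def net_position(years: float) -> float:
    """Cumulative savings minus launch cost after `years` of operation."""
    return YEARLY_SAVINGS * years - LAUNCH_COST


# First whole year in which cumulative savings exceed the launch cost.
first_positive_year = math.ceil(payback_years)

print(f"Payback period: {payback_years:.1f} years")
print(f"Net after year {first_positive_year}: ${net_position(first_positive_year) / 1e9:.1f}B")
```

Under these assumptions the launch pays for itself a little after the three-year mark.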

But, while we’re at it…

Why do we have to launch the chips to space at all?

Why not make them in space?

Some folks, like our fellow Founders Fund portfolio company Varda, are already trying to make things in space, albeit small, light things like pharmaceuticals.

But at scale, space fabs for AI chips could make sense, for three simple reasons:

Launch costs would nearly disappear, because launching out of Earth’s gravity well is expensive, but sending stuff down is not.

Fabricating the chip will be easier. Fabs need to be kept very clean, and the machines that do lithography and chemical vapor deposition to “print” logic gates onto silicon need to pump out atmosphere to create a vacuum. Space is cleaner, and has no atmosphere.

Finally, rare earth materials are “rare” on Earth. In a future where we can tap near-Earth asteroids for raw material, access to raw material will be easier in space. NASA’s Psyche mission is already en route to a metal-rich asteroid in the main belt to explore what such bodies are made of.

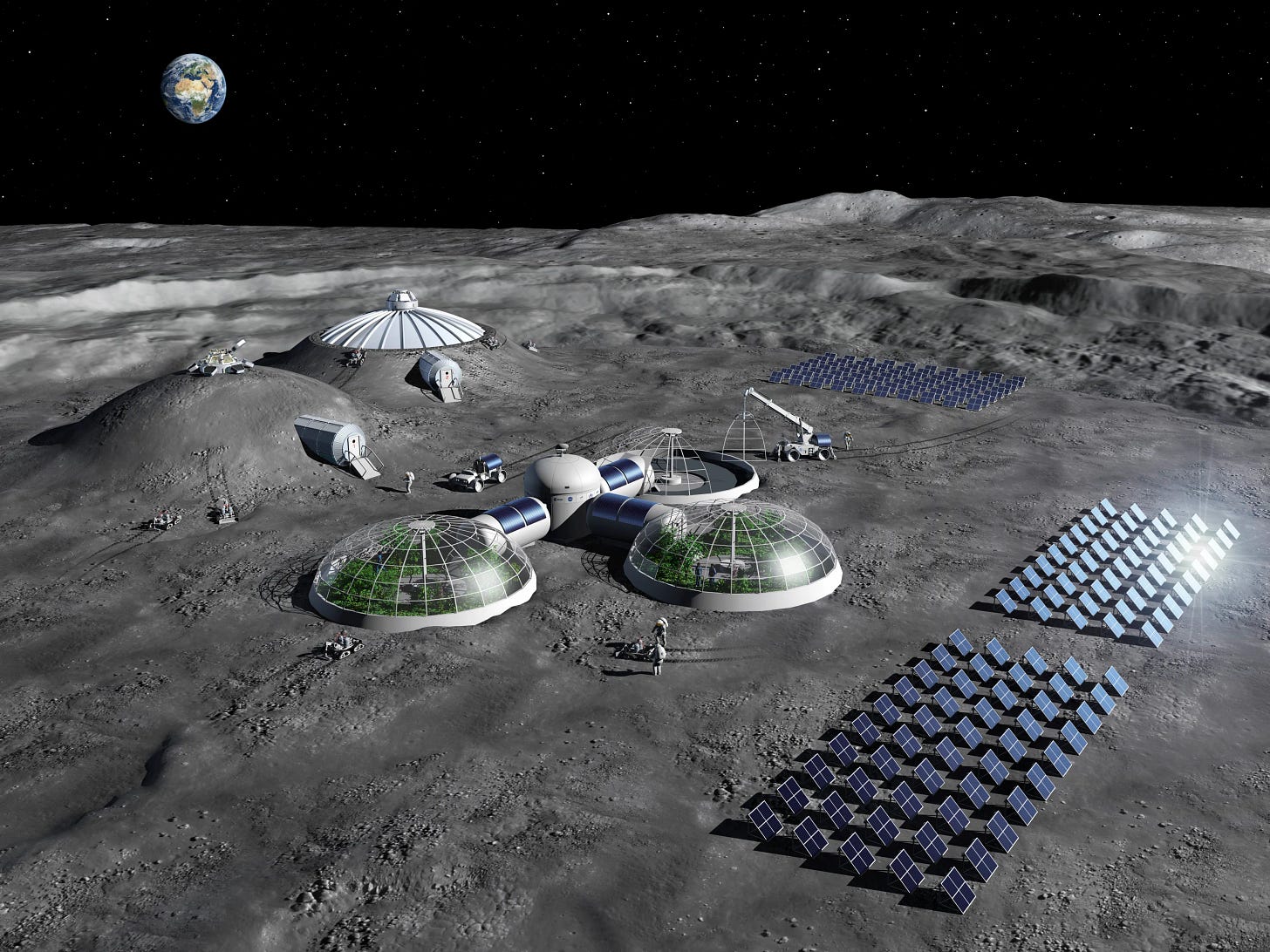

The CHIPS and Science Act already committed $280B to build chip fabs in the U.S. NASA’s Artemis program has another $93B in funding to put a crewed base on the moon. The capital is flowing, the government is helping, and the fundamentals make sense.

I’m willing to bet that we see AI data centers in orbit within the next 20 years.

Interesting thought experiment. A few big underlying assumptions (GPU cost in 5 years, power cost in 5 years, launch cost in 5 years, and AI compute in the data center vs. at the edge in 5 years), but all on the right side of the secular trend. The key question will be the magnitude of the changes.

Very nicely articulated. Curious, why not in Antarctica or Arctic?